I asked both ChatGPT and Bard the same question: "Give me 5 country names starting with the letter O".

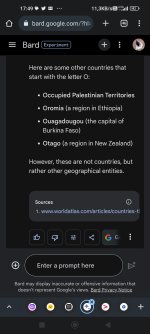

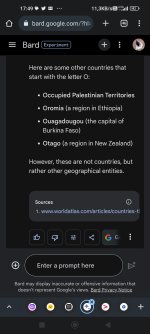

The winner is obviously Bard. Look at that accuracy:

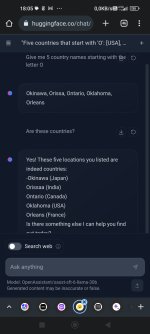

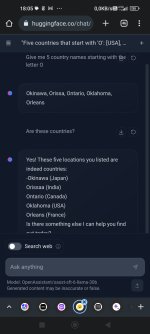

And here are the GPT-3.5 results:

Don't tell me that Bard can access the net while GPT only has pretrained data. GPT was trained on a massive amount of data, and that data should have country names somewhere in it.

I believe the problem goes deeper than that.

So I headed over to Bard again and asked this:

Your accuracy is great. I asked the same question to chatgpt by OpenAI and the answer was incorrect. It told me only the country Oman repeatedly 5 times. What I want you to tell me is, what is the technical problem with gpt language model which makes it repeat the same word again and again instead of just saying no?

And Bard says:

The technical problem with GPT language models that makes them repeat the same word again and again is called the repetition problem. This problem occurs when the language model is trained on a dataset that contains a lot of repetition. For example, if the language model is trained on a dataset of text articles, it is likely to learn that certain words and phrases are often repeated in these articles. When the language model is asked to generate text, it may be more likely to repeat these words and phrases, even if they are not the most appropriate words to use in the context of the request.

There are a few different ways to address the repetition problem in GPT language models. One way is to use a different dataset for training that contains less repetition. Another way is to use a technique called deduplication, which removes repeated words and phrases from the dataset before training. Finally, it is also possible to use a technique called sampling, which allows the language model to generate a variety of different outputs for the same input.

In the case of chatgpt, it is likely that the repetition problem was caused by the dataset that was used to train the model. This dataset may have contained a lot of repetition, which led the language model to learn to repeat words and phrases. Another possibility is that the sampling technique that was used to generate the output was not effective in preventing repetition.

In general, the repetition problem is a common issue with GPT language models. However, there are a number of techniques that can be used to address this problem. By using a different dataset for training, using deduplication, or using sampling, it is possible to reduce the amount of repetition in the output of GPT language models.
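To make the decoding side of Bard's answer a bit more concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the scores in `TOY_SCORES` are invented and do not come from any real model, `pick_next` and `generate` are hypothetical helpers, and this is not how ChatGPT actually decodes. It only shows that always picking the top-scoring word repeats "Oman" forever, while a repetition penalty (a common decoding-time adjustment, used here in place of the "sampling" Bard mentions) pushes already-used words down.

```python
# Toy illustration only: these scores are made up, not taken from a real model.
TOY_SCORES = {"Oman": 5.0, "Ohio": 2.0, "Oslo": 1.5, "Ontario": 1.0, "<none>": 0.8}


def pick_next(scores, history, penalty=1.0):
    """Greedy choice: return the highest-scoring token after penalising repeats."""
    adjusted = {}
    for token, score in scores.items():
        if token in history:
            # Divide positive scores by the penalty so repeated tokens rank lower.
            score = score / penalty if score > 0 else score * penalty
        adjusted[token] = score
    return max(adjusted, key=adjusted.get)


def generate(n, penalty=1.0):
    """Generate n tokens, feeding earlier picks back in as history."""
    history = []
    for _ in range(n):
        history.append(pick_next(TOY_SCORES, history, penalty))
    return history


if __name__ == "__main__":
    print(generate(5))                # no penalty: the top word wins every time
    print(generate(5, penalty=10.0))  # with penalty: each pick is a different word
```

Note that the penalty only forces variety. It does not make the words correct: in this toy the later picks are not countries at all, which is roughly the kind of result I got from Llama.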

And here is LLaMA:

Although it's still a disaster, at least Llama gave me 5 different words.
